The Common Security Advisory Framework (CSAF) is a framework for providing machine-readable security advisories following a standardized process to enable automated cybersecurity information sharing. Greenbone is continuously working on the integration of technologies that leverage the CSAF 2.0 standard for automated cybersecurity advisories. For an introduction to CSAF 2.0 and how it supports next-generation vulnerability management, you can refer to our previous blog post.

In 2024, an outage at the NIST National Vulnerability Database (NVD) disrupted the flow of critical cybersecurity intelligence to downstream consumers, which makes the decentralized CSAF 2.0 model increasingly relevant. The outage highlights the need for a decentralized cybersecurity intelligence framework that is more resilient against a single point of failure. Those who adopt CSAF 2.0 will be one step closer to a more reliable cybersecurity intelligence ecosystem.

Table of Contents

1. What We Will Cover in this Article

2. Who Are the CSAF Stakeholders?

2.1. Understanding Roles in the CSAF 2.0 Process

2.1.1. CSAF 2.0 Issuing Parties

2.1.1.1. Understanding the CSAF Publisher Role

2.1.1.2. Understanding the CSAF Provider Role

2.1.1.3. Understanding the CSAF Trusted-Provider Role

2.1.2. CSAF 2.0 Data Aggregators

2.1.2.1. Understanding the CSAF Lister Role

2.1.2.2. Understanding the CSAF Aggregator Role

3. Summary

1. What We Will Cover in this Article

This article provides a detailed explanation of the stakeholders and roles defined in the CSAF 2.0 specification. These roles govern how security advisories are created, disseminated and consumed within the CSAF 2.0 ecosystem. By understanding who the stakeholders of CSAF are and the standardized roles defined by the CSAF 2.0 framework, security practitioners can better understand how CSAF works, whether it can benefit their organization and how to implement CSAF 2.0.

2. Who Are the CSAF Stakeholders?

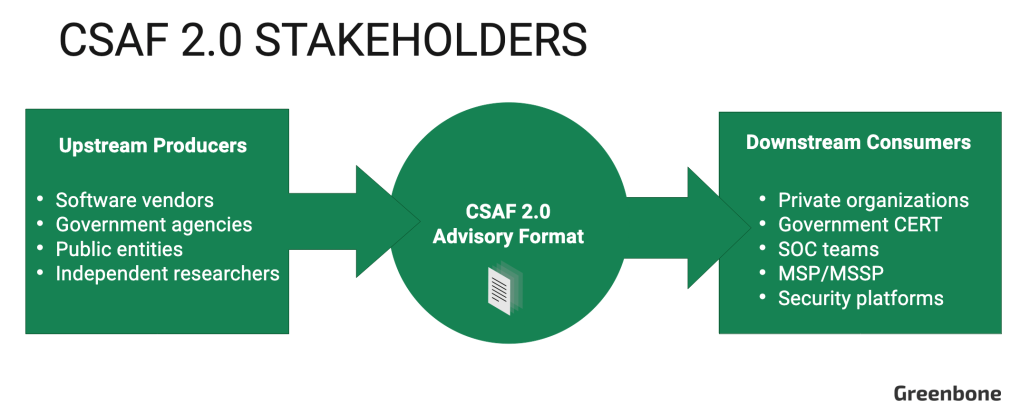

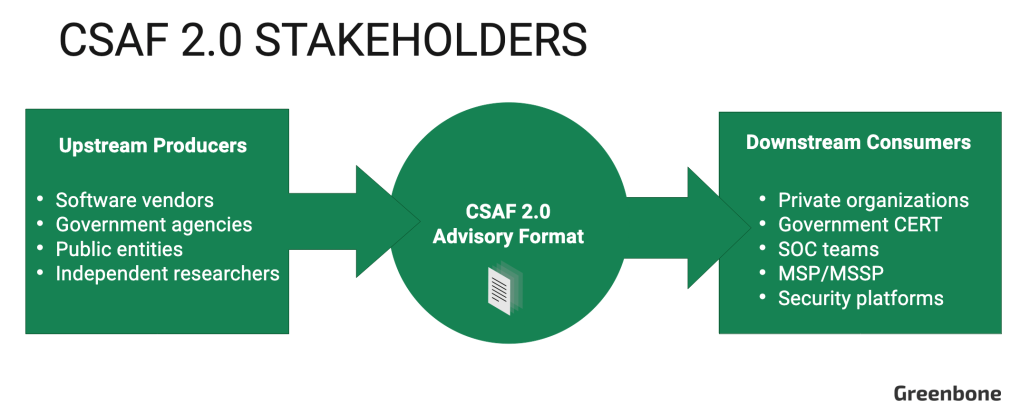

At the highest level, the CSAF process has two primary stakeholder groups: upstream producers who create and supply cybersecurity advisories in the CSAF 2.0 document format and downstream consumers (end-users) who consume the advisories and apply the security information they contain.

Upstream producers are typically software product vendors (such as Cisco, Red Hat and Oracle) who are responsible for maintaining the security of their digital products and providing publicly available information about vulnerabilities. Upstream stakeholders also include independent security researchers and public entities that act as a source of cybersecurity intelligence, such as the US Cybersecurity and Infrastructure Security Agency (CISA) and the German Federal Office for Information Security (BSI).

Downstream consumers consist of private corporations who manage their own cybersecurity and Managed Security Service Providers (MSSPs), third-party entities that provide outsourced cybersecurity monitoring and management. The information contained in CSAF 2.0 documents is used downstream by IT security teams to identify vulnerabilities in their infrastructure and plan remediation and by C-level executives for assessing how IT risk could negatively impact operations.

The CSAF 2.0 standard defines specific roles for upstream producers that outline their participation in creating and disseminating advisory documents. Let’s examine those officially defined roles in more detail.

2.1. Understanding Roles in the CSAF 2.0 Process

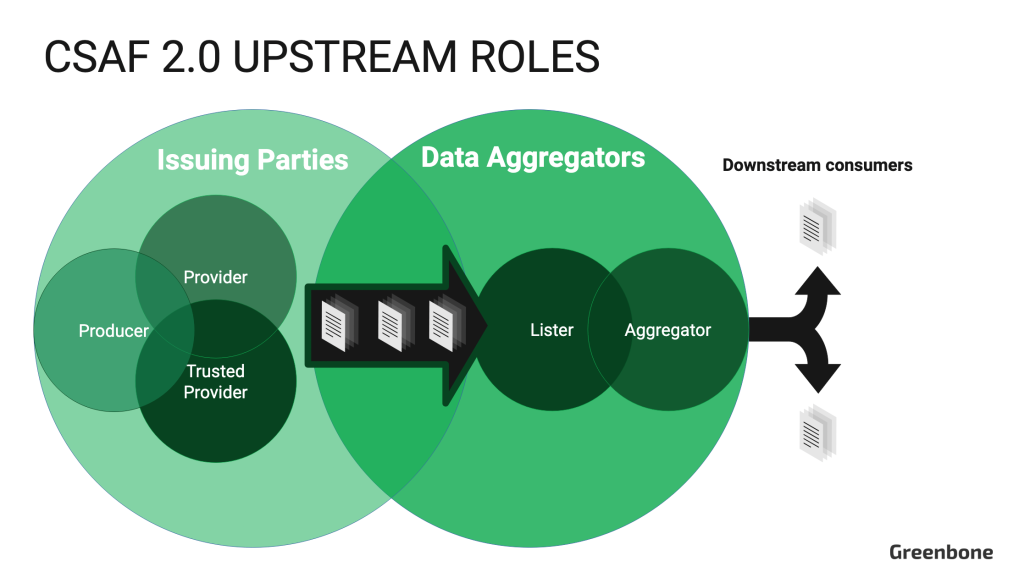

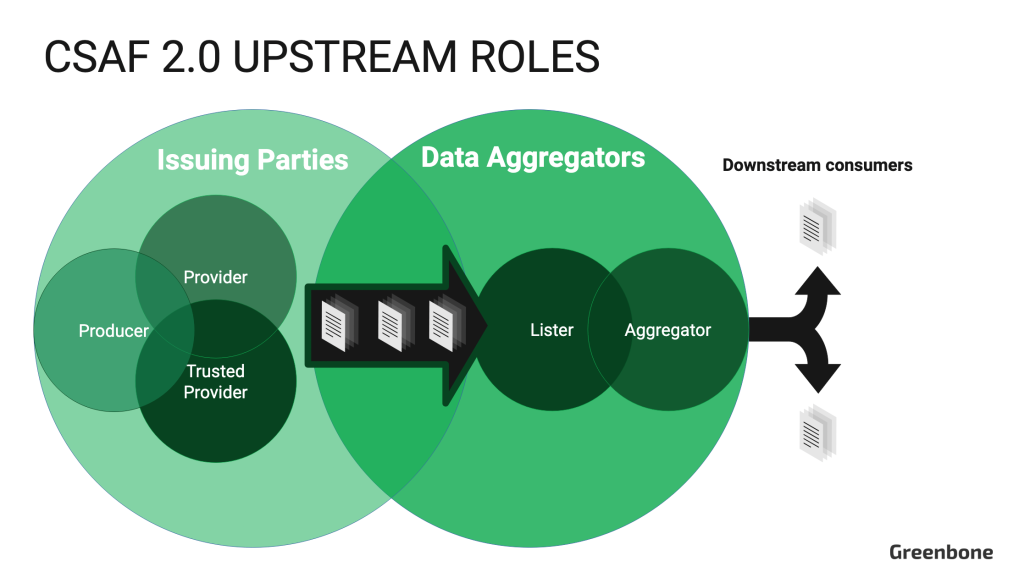

CSAF 2.0 roles are defined in Section 7.2 of the specification. They are divided into two distinct groups: Issuing Parties (“Issuers”) and Data Aggregators (“Aggregators”). Issuers are directly involved in the creation of advisory documents. Aggregators collect those documents and distribute them to end-users, supporting automation for consumers. A single organization may fulfill the roles of both an Issuer and an Aggregator; however, these functions should operate as separate entities. Organizations that act as upstream producers must also maintain their own cybersecurity, so they may also be downstream consumers, ingesting CSAF 2.0 documents to support their own vulnerability management activities.

Next, let’s break down the specific responsibilities for CSAF 2.0 Issuing Parties and Data Aggregators.

2.1.1. CSAF 2.0 Issuing Parties

Issuing Parties are the origin of CSAF 2.0 cybersecurity advisories. However, Issuing Parties are not responsible for transmitting the documents to end-users. Issuing Parties are responsible for indicating if they do not want their advisories to be listed or mirrored by Data Aggregators. CSAF 2.0 Issuing Parties can also act as Data Aggregators.

Here are explanations of each sub-role within the Issuing Parties group:

2.1.1.1. Understanding the CSAF Publisher Role

Publishers are typically organizations that discover and communicate advisories only on behalf of their own digital products. Publishers must satisfy requirements 1 to 4 in Section 7.1 of the CSAF 2.0 specification. This means issuing structured files with valid syntax and content that adhere to the CSAF 2.0 filename conventions described in Section 5.1 and ensuring that files are only available via encrypted TLS connections. Publishers must also make all advisories classified as TLP:WHITE publicly accessible.
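To make the filename convention more concrete, here is a minimal Python sketch based on our reading of Section 5.1: the advisory's tracking ID is lowercased, every run of characters other than lowercase letters, digits, `+` and `-` is replaced by a single underscore, and `.json` is appended. The function name `csaf_filename` is our own choice for illustration, so please check the specification before relying on it.

```python
import re


def csaf_filename(tracking_id: str) -> str:
    """Derive a CSAF 2.0 filename from a /document/tracking/id value.

    Illustrative helper only; the name and structure are not part of the spec.
    """
    lowered = tracking_id.lower()
    # Replace every run of characters outside [a-z0-9+-] with one underscore.
    sanitized = re.sub(r"[^+\-a-z0-9]+", "_", lowered)
    return sanitized + ".json"


print(csaf_filename("Example Company - 2019-YH3234"))
print(csaf_filename("RHBA-2019:0024"))
```

Because the filename is derived deterministically from the tracking ID, an automated consumer can predict where a given advisory should live.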

Publishers must also have a publicly available provider-metadata.json document containing basic information about the organization, its CSAF 2.0 role status, and links to the OpenPGP public keys used to digitally sign its CSAF documents so that their integrity can be verified. This information about the Publisher is used downstream by software applications that display the Publisher’s advisories to end-users.
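As a rough illustration, a provider-metadata.json document for a hypothetical vendor could be assembled as shown below. The field names follow our understanding of the CSAF 2.0 provider metadata schema, while every value (the domain, the timestamp, the key fingerprint) is a placeholder. The two `*_on_CSAF_aggregators` flags are how an Issuing Party signals whether Data Aggregators may list or mirror its advisories.

```python
import json

# All values are placeholders for a hypothetical vendor at example.com.
provider_metadata = {
    "canonical_url": "https://www.example.com/.well-known/csaf/provider-metadata.json",
    "last_updated": "2024-01-01T00:00:00Z",
    # Opt in or out of being listed or mirrored by Data Aggregators.
    "list_on_CSAF_aggregators": True,
    "mirror_on_CSAF_aggregators": False,
    "metadata_version": "2.0",
    "public_openpgp_keys": [
        {
            # Placeholder fingerprint, not a real key.
            "fingerprint": "0123456789ABCDEF0123456789ABCDEF01234567",
            "url": "https://www.example.com/.well-known/csaf/openpgp/key.asc",
        }
    ],
    "publisher": {
        "category": "vendor",
        "name": "Example Company",
        "namespace": "https://www.example.com",
    },
    "role": "csaf_publisher",
}

print(json.dumps(provider_metadata, indent=2))
```

A real deployment should validate the file against the official JSON schema rather than trusting a hand-written structure like this one.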

2.1.1.2. Understanding the CSAF Provider Role

Providers make CSAF 2.0 documents available to the broader community. In addition to meeting all the same requirements as a Publisher, a Provider must provide its provider-metadata.json file according to a standardized method (at least one of the requirements 8 to 10 from Section 7.1), employ standardized distribution for its advisories, and implement technical controls to restrict access to any advisory documents with a TLP:AMBER or TLP:RED status.
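As we understand requirements 8 to 10, the three standardized discovery methods are a `CSAF` field in the organization's security.txt file, a well-known URL, and a dedicated DNS name. The sketch below shows how a consumer might work out where to look, without making any network calls. The helper names and the sample domain are ours, purely for illustration.

```python
def candidate_metadata_urls(domain: str) -> list[str]:
    """Locations derived from the domain alone (our reading of requirements 9 and 10)."""
    return [
        f"https://{domain}/.well-known/csaf/provider-metadata.json",
        f"https://csaf.data.security.{domain}",
    ]


def csaf_urls_from_security_txt(text: str) -> list[str]:
    """Extract the values of all CSAF fields from a security.txt body (requirement 8)."""
    urls = []
    for line in text.splitlines():
        name, separator, value = line.partition(":")
        # Field names are matched case-insensitively; comment lines never match.
        if separator and name.strip().lower() == "csaf":
            urls.append(value.strip())
    return urls


sample_security_txt = """\
# Hypothetical security.txt
Contact: mailto:security@example.com
CSAF: https://www.example.com/.well-known/csaf/provider-metadata.json
"""

print(csaf_urls_from_security_txt(sample_security_txt))
print(candidate_metadata_urls("example.com"))
```

A Provider only needs to support one of these methods, so a consumer would typically try each in turn until one succeeds.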

Providers must also choose to distribute documents using either the directory-based or the ROLIE-based method. Simply put, directory-based distribution makes advisory documents available in a normal directory path structure, while ROLIE (Resource-Oriented Lightweight Information Exchange) [RFC-8322] is a RESTful API protocol designed specifically for security automation, information publication, discovery and sharing.

If a Provider uses the ROLIE-based distribution, it must also satisfy requirements 15 to 17 from Section 7.1. Alternatively, if a Provider uses the directory-based distribution it must satisfy requirements 11 to 14 from Section 7.1.
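To give a feel for the directory-based option, the following sketch builds the two helper files we understand requirements 12 and 13 to describe: an index.txt listing every advisory path, and a changes.csv listing each path with its last change timestamp, newest first. It assumes advisories are stored in one folder per year of their initial release date (requirement 11). The advisory data and function names are invented for illustration.

```python
import csv
import io

# Hypothetical advisories: (filename, initial_release_date, current_release_date)
advisories = [
    ("example_company_-_2019-yh3234.json", "2019-09-03T08:00:00Z", "2020-07-01T10:09:07Z"),
    ("example_company_-_2020-yh4711.json", "2020-06-01T12:00:00Z", "2020-06-01T12:00:00Z"),
]


def relative_path(filename: str, initial_release_date: str) -> str:
    # The year folder is the first four characters of the ISO timestamp.
    return f"{initial_release_date[:4]}/{filename}"


def build_index_txt(items) -> str:
    """One relative advisory path per line."""
    return "".join(relative_path(name, initial) + "\n" for name, initial, _ in items)


def build_changes_csv(items) -> str:
    """Quoted path and timestamp pairs, most recently changed advisory first."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, quoting=csv.QUOTE_ALL, lineterminator="\n")
    # Sorting the strings works here only because all timestamps share one UTC format.
    for name, initial, current in sorted(items, key=lambda item: item[2], reverse=True):
        writer.writerow([relative_path(name, initial), current])
    return buffer.getvalue()


print(build_index_txt(advisories))
print(build_changes_csv(advisories))
```

A consumer can poll changes.csv and fetch only the advisories whose timestamps are newer than its last run, which is the kind of automation the standardized layout is meant to enable.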

2.1.1.3. Understanding the CSAF Trusted-Provider Role

Trusted-Providers are a special class of CSAF Providers who have established a high level of trust and reliability. They must adhere to stringent security and quality standards to ensure the integrity of the CSAF documents they issue.

In addition to meeting all the requirements of a CSAF Provider, Trusted-Providers must also satisfy the requirements 18 to 20 from Section 7.1 of the CSAF 2.0 specification. These requirements include providing a secure cryptographic hash and OpenPGP signature file for each CSAF document issued and ensuring the public part of the OpenPGP signing key is made publicly available.
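The hash files mentioned above are simple to produce. As a sketch, assuming the common convention of a sidecar file named after the advisory with a `.sha256` or `.sha512` suffix whose content begins with the hexadecimal digest, a Trusted-Provider's publishing pipeline might do something like the following. The function name is ours, and producing the OpenPGP signature file requires a separate signing tool that is not shown here.

```python
import hashlib


def hash_sidecars(filename: str, content: bytes) -> dict[str, str]:
    """Return sidecar filenames mapped to their text content for one advisory."""
    sidecars = {}
    for algorithm in ("sha256", "sha512"):
        digest = hashlib.new(algorithm, content).hexdigest()
        # The digest comes first; the filename after it is optional extra data.
        sidecars[f"{filename}.{algorithm}"] = f"{digest}  {filename}\n"
    return sidecars


document = b'{"document": {"title": "Hypothetical advisory"}}'
for name, body in hash_sidecars("example_company_-_2019-yh3234.json", document).items():
    print(name, body, end="")
```

This sketch generates both SHA-256 and SHA-512 files for illustration; which algorithms to offer is a decision for the issuing organization.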

2.1.2. CSAF 2.0 Data Aggregators

Data Aggregators focus on the collection and redistribution of CSAF documents. They act as a directory for CSAF 2.0 Issuers and their advisory documents and as an intermediary between Issuers and end-users. A single entity may act as both a CSAF Lister and Aggregator. Data Aggregators may choose which upstream Publishers’ advisories to list or collect and redistribute based on their customers’ needs.

Here are explanations of each sub-role in the Data Aggregator group:

2.1.2.1. Understanding the CSAF Lister Role

Listers gather CSAF documents from multiple CSAF Publishers and list them in a centralized location to facilitate retrieval. The purpose of a Lister is to act as a sort of directory for CSAF 2.0 advisories by consolidating URLs where CSAF documents can be accessed. No Lister is assumed to provide a complete set of all CSAF documents.

Listers must publish a valid aggregator.json file that lists at least two separate CSAF Provider entities. While a Lister may also act as an Issuing Party, it may not list mirrors pointing to a domain under its own control.
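The two Lister rules above lend themselves to a quick automated check. The sketch below uses a stripped-down aggregator.json structure with field names as we understand them from the CSAF 2.0 aggregator schema. The domains, organization names and the `lister_problems` helper are hypothetical, and a real file carries more metadata per provider than shown here.

```python
from urllib.parse import urlparse

# Simplified, hypothetical aggregator.json content for a Lister.
aggregator_json = {
    "aggregator": {
        "category": "lister",
        "name": "Example Lister",
        "namespace": "https://lister.example",
    },
    "aggregator_version": "2.0",
    "canonical_url": "https://lister.example/.well-known/csaf-aggregator/aggregator.json",
    "last_updated": "2024-01-01T00:00:00Z",
    "csaf_providers": [
        {"metadata": {"role": "csaf_provider",
                      "url": "https://vendor-a.example/.well-known/csaf/provider-metadata.json"}},
        {"metadata": {"role": "csaf_trusted_provider",
                      "url": "https://vendor-b.example/.well-known/csaf/provider-metadata.json"}},
    ],
}


def lister_problems(doc: dict, own_domain: str) -> list[str]:
    """Return human-readable violations of the Lister rules described above."""
    problems = []
    if doc["aggregator"]["category"] != "lister":
        problems.append("category is not 'lister'")
    providers = doc.get("csaf_providers", [])
    if len(providers) < 2:
        problems.append("fewer than two CSAF Providers listed")
    for provider in providers:
        for mirror in provider.get("mirrors", []):
            host = urlparse(mirror).hostname or ""
            # A mirror on the Lister's own domain or a subdomain is not allowed.
            if host == own_domain or host.endswith("." + own_domain):
                problems.append(f"mirror under own domain: {mirror}")
    return problems


print(lister_problems(aggregator_json, "lister.example"))
```

An empty list means the file passes these two checks; it says nothing about full schema validity.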

2.1.2.2. Understanding the CSAF Aggregator Role

The CSAF Aggregator role represents the final waypoint between published CSAF 2.0 advisory documents and the end-user. Aggregators provide a location where CSAF documents can be retrieved by an automated tool. Although Aggregators act as a consolidated source of cybersecurity advisories, comparable to NIST NVD or The MITRE Corporation’s CVE.org, CSAF 2.0 is a decentralized model rather than a centralized one. Aggregators are not required to offer a comprehensive list of CSAF documents from all Publishers. Also, Aggregators may provide free access to their CSAF advisory feed or operate as a paid service.

Similar to Listers, Aggregators must make an aggregator.json file publicly available. In addition, CSAF documents from each mirrored Issuer must be placed in a separate dedicated folder along with the Issuer’s provider-metadata.json. Essentially, Aggregators must satisfy requirements 1 to 6 and 21 to 23 from Section 7.1 of the CSAF 2.0 specification.
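The requirement numbers quoted throughout this article can be collected into a small checker that answers the question "which role does a given set of satisfied requirements qualify for?". This is only a sketch. The role names are our own labels, and requirements 5 to 7 for Providers are an assumption based on our reading of Section 7.2.2 of the specification rather than something stated explicitly above.

```python
def satisfies(role: str, met: set[int]) -> bool:
    """Check a set of satisfied Section 7.1 requirement numbers against a role."""
    publisher = set(range(1, 5)) <= met
    provider = (
        publisher
        # Assumption: requirements 5 to 7, per our reading of Section 7.2.2.
        and {5, 6, 7} <= met
        # At least one standardized way to find provider-metadata.json.
        and bool({8, 9, 10} & met)
        # Either directory-based or ROLIE-based distribution, in full.
        and ({11, 12, 13, 14} <= met or {15, 16, 17} <= met)
    )
    checks = {
        "publisher": publisher,
        "provider": provider,
        "trusted_provider": provider and {18, 19, 20} <= met,
        "aggregator": (set(range(1, 7)) | {21, 22, 23}) <= met,
    }
    return checks[role]


rolie_provider = set(range(1, 8)) | {9, 15, 16, 17}
print(satisfies("provider", rolie_provider))
print(satisfies("trusted_provider", rolie_provider))
```

Laying the rules out this way makes the layering visible: each Issuing Party role builds on the one before it, while the Aggregator role has its own separate set of requirements.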

CSAF Aggregators are also responsible for ensuring that each mirrored CSAF document has a valid signature (requirement 19) and a secure cryptographic hash (requirement 18). If the Issuing Party does not provide these files, the Aggregator must generate them.
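In practice, this means a mirroring job has to check each document for its companion files and fill in whatever is missing. Here is a minimal sketch of the hash half of that logic, using an in-memory dictionary to stand in for a mirror folder and assuming the `.sha256` sidecar naming convention. The function name and return values are ours. Signature handling would follow the same pattern but needs an OpenPGP tool, so it is left out.

```python
import hashlib


def ensure_sha256(folder: dict[str, bytes], filename: str) -> str:
    """Verify or create the SHA-256 sidecar for one mirrored document.

    Returns "verified", "mismatch" or "generated".
    """
    digest = hashlib.sha256(folder[filename]).hexdigest()
    sidecar = filename + ".sha256"
    if sidecar in folder:
        # The published file starts with the hex digest; anything after it is optional.
        parts = folder[sidecar].decode("ascii").split()
        published = parts[0].lower() if parts else ""
        return "verified" if published == digest else "mismatch"
    # The Issuing Party did not supply a hash file, so the Aggregator creates one.
    folder[sidecar] = f"{digest}  {filename}\n".encode("ascii")
    return "generated"


mirror = {"advisory-1.json": b'{"document": {}}'}
print(ensure_sha256(mirror, "advisory-1.json"))  # no sidecar yet
print(ensure_sha256(mirror, "advisory-1.json"))  # sidecar now present
```

How an Aggregator should react to a mismatch (reject the document, retry, alert an operator) is a policy decision that this sketch leaves open.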

3. Summary

Understanding CSAF 2.0 stakeholders and roles is essential for implementing CSAF 2.0 properly and for benefiting from the automated collection and consumption of critical cybersecurity information. The CSAF 2.0 specification defines two main stakeholder groups: upstream producers, responsible for creating cybersecurity advisories, and downstream consumers, who apply this information to enhance security. Roles within CSAF 2.0 include Issuing Parties, such as Publishers, Providers, and Trusted-Providers, who generate and distribute advisories, and Data Aggregators, like Listers and Aggregators, who collect and disseminate these advisories to end-users.

Members of each role must adhere to specific security controls that support the secure transmission of CSAF 2.0 documents, and the Traffic Light Protocol (TLP) governs how documents are authorized to be shared and the required access controls.